Synopsis

Starsky Robotics was working on an autonomous vehicle solution with a clever twist: a remote driver (teleoperation) would take over anything their AI system couldn't handle.

I was responsible for the teleoperation program. When I started, Starsky had a rough prototype that could drive 10 mph on a straight road. By the time I finished, we had control centers in California and Florida, with control stations capable of operating trucks in live traffic.

The challenge

The founders wanted a working demo in fewer than 100 days

We needed to demonstrate a “gate-to-gate” trucking run using only the teleoperation and autonomous systems.

My role

Senior UX Designer, Product Manager

I was responsible for:

- Product ownership: teleoperations

- Software & hardware design

- UX research & design

- Coordinating teams for access and resources

- Product roadmap

Initial Assessment

The prototype required a team effort to drive 10 mph on a straight road

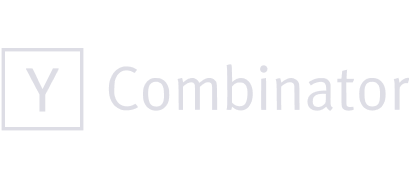

The prototype control station was a video game racing seat with three small 27-inch screens. The center screen showed a 180° panorama from inside the cab, and the left and right screens showed the "side mirror" cameras. Admin controls and settings were displayed in the bottom-right corner.

The image from the 180° camera was far from optimal: the view out of the windows was distorted, warping down to the right. The HUD showed speed (km/h) and bars comparing the steering-wheel rotation of the controller with that of the truck.

Driving tests took place in a busy open-office environment. The remote driver and safety driver in the truck could not talk directly. All communication was relayed by engineers on cell phones.

UX Design

Ergonomics

Driving figure-eights in the new teleoperation station

The easiest way to drive adoption for an experimental system is to make it feel natural and familiar

We relocated the control station to a private room, so drivers could operate the truck without distractions.

Next, we upgraded all the equipment to make the driving experience truer to life. Everything was modular and adjustable for better ergonomics.

Microphones and speakers were installed in the control room and truck, so the remote driver and safety driver could speak directly.

Poor video quality and visibility were an ongoing challenge. Our solutions included:

- Applying a matrix transformation to warp the video to fit the screens

- Using two different cell carriers for redundancy, reducing the risk of a dropped signal

- Repositioning the camera in the truck to improve visibility and reduce "angular velocity perception"

UX Research & Design

Safety & confidence

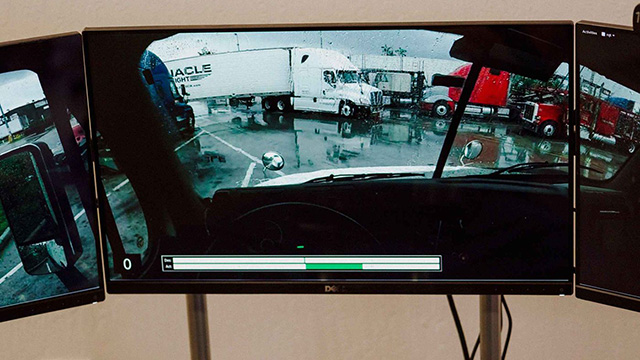

Teleoperation control station HUD

Safety driver HUD

Drivers needed complete situational awareness at all times

We were often testing in remote locations with poor cell service, resulting in video artifacts, frozen frames, and signal loss.

We immediately implemented error alerts and system-status indicators and displayed them prominently in both the teleoperation control station and the safety driver HUD.

We also established a verbal communication protocol for handoffs. Drivers were required to say to each other "I have control," answered by "You have control."
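The verbal handoff amounts to a two-step acknowledgment. As a rough illustration only (this is not Starsky's software), here is a minimal Python sketch that assumes the driver taking over says "I have control" and the driver handing over confirms with "You have control," so control changes hands only after both phrases. The `Handoff` class and `Party` names are hypothetical.

```python
from enum import Enum
from typing import Optional


class Party(Enum):
    REMOTE = "remote driver"
    SAFETY = "safety driver"


class Handoff:
    """Hypothetical model of the two-phrase handoff: control moves only after
    the receiving driver claims it and the current holder confirms."""

    def __init__(self, holder: Party) -> None:
        self.holder = holder
        self.pending: Optional[Party] = None  # claimed but not yet confirmed

    def claim(self, party: Party) -> None:
        # Step 1 (assumed): the receiving driver says "I have control".
        if party == self.holder:
            raise ValueError(f"{party.value} already has control")
        self.pending = party

    def confirm(self, party: Party) -> Party:
        # Step 2 (assumed): the current holder replies "You have control".
        if party != self.holder:
            raise ValueError("only the current holder can release control")
        if self.pending is None:
            raise ValueError("no pending claim to confirm")
        self.holder, self.pending = self.pending, None
        return self.holder


if __name__ == "__main__":
    h = Handoff(Party.SAFETY)
    h.claim(Party.REMOTE)        # "I have control"
    print(h.holder.value)        # still the safety driver until confirmed
    h.confirm(Party.SAFETY)      # "You have control"
    print(h.holder.value)        # now the remote driver
```

Because a claim on its own never moves control, neither driver can assume they are in charge until the other has explicitly acknowledged it.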

UX Research

Driver training

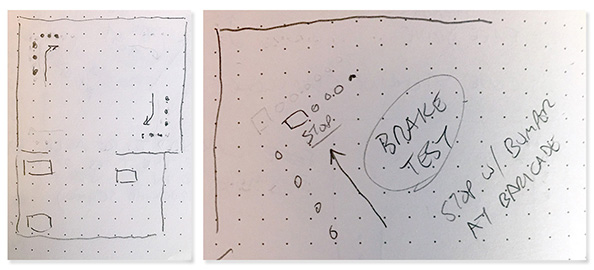

Training started with a video game

I installed American Truck Simulator in the control station, so new drivers could get used to the control station interface before handling a real truck.

Hands-on training was incremental. We started with slow figure-eights, handoffs, and safety drills. Drivers gradually learned how to apply the brakes and make turns without tossing everyone around in the truck.

Over time, we moved from a closed lot to low-traffic areas. From there, drivers progressed to pulling a trailer, highway driving, on-ramps and off-ramps, and eventually street traffic.

Waiting to make a left turn in Florida

System Design

Designing for scale

The initial prototype was an all-in-one system, with the truck linked directly to the station. Switching to a different truck required a developer to push new code.

I separated the admin settings from the driver station. The driver station contained only the essentials: video, indicators, and controls. The admin station expanded to manage multiple trucks and track their location, speed, fuel level, and cell signal strength.

A month before the demo, we opened a second teleoperation center in Florida, where the trucks were actually being driven. Running two operation centers required new safety checks and features to prevent conflicts between them.

Delivery

A successful demo

On September 22nd, 2017, Starsky Robotics completed the world's first end-to-end autonomous trucking run

Selected works

Adobe ConsonantDesign library for the global leader in digital media

LookoutDesign library for the leading provider of data-centric cloud security

First PersonDesign libraries and technical design for a B2B discovery & design agency

VectraDesign library for the world leaders in AI security

OmnicellDesign library for the pioneers of autonomous pharmacy

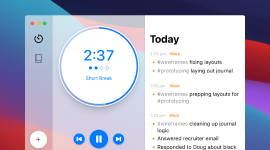

EpilogDesign & development of a smarter Pomodoro activity log

Disney Movies AnywhereAndroid design & development for the family entertainment giant

HD WidgetsDesign & development of a #1 ranked Android app

DayframeDesign & development of a social media photo frame app

App StatsDesign & development of a metrics app used by Google

CloudskipperDesign & development of a popular free music player

Starsky RoboticsUX research & design of a remote-driving system for an autonomous trucking start-up

Flash design & developmentAdobe, Honda, NFL, Zynga, and more (2003 – 2010)

ElementalCreative graphic design and illustration(1994 – 2003)