Starsky Robotics was a trucking company working to make trucks autonomous on the highway and remotely controlled for the first and last mile

Synopsis

Starsky Robotics was working on an autonomous vehicle solution with a clever twist. They used a simple autonomous system for driving on the highway, and a remote-driving system (aka teleoperation) for everything else.

I led the teleoperation program at Starsky. When I started, the company had a rough prototype that could only drive 10 mph on a straight road. Within three months, I had redesigned the system to make it viable. We established two control centers—one in California and one in Florida—each equipped with a teleoperation station capable of remotely operating trucks in live traffic.

The challenge

The founders wanted a working demo in less than 100 days

We needed to demonstrate a “gate-to-gate” run, using only the teleoperation and autonomous systems.

The remote driver would operate the truck from the starting gate onto the highway, then engage the autonomous system for the long highway stretch. During that time, the remote driver would instruct the autonomous system to change lanes as needed. Upon arrival, the remote driver would take over, exit the highway, and drive the truck to the destination.

My role

Senior UX Designer, Product Manager

I was responsible for:

- Product ownership

- Product roadmap

- Software & hardware design

- UX R&D and driver peer-training

- Coordinating access and resources

Initial Assessment

The prototype required a team effort to drive 10 mph on a straight road

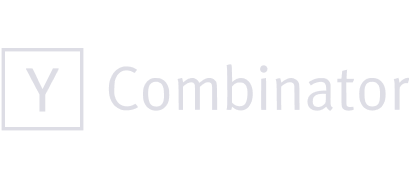

The prototype control station was a video game racing set-up with three small 27-inch screens. A 180° panorama, captured live by a camera above the driver in the cab, was split between the screens. The far left and right views were rear-facing cameras acting as rearview mirrors. A developer would sit in the chair and use the keyboard to make modifications between sessions.

The image from the 180° camera was not optimal. It was distorted and squished, especially on the right side. The HUD had only two indicators: speed (kph) and a pair of progress bars that compared the steering-wheel rotation between the controller and truck.

Driving tests took place in a busy open office. The remote driver in the office and the safety driver in the truck could not talk directly to each other. All communication was relayed by engineers on cell phones.

UX Design

Ergonomics

Driving figure eights in the new teleoperation station

The easiest way to drive adoption of an experimental system is to make it feel natural and familiar

My first step was to relocate the control station to a private room, so drivers could operate the truck without distractions.

Next, we upgraded all the equipment to make the driving experience more true to life. Three 50" HDTVs replaced the tiny 22" monitors. A hydraulic chair with armrests replaced the racing seat. Everything was modular, so it could be adjusted by the drivers for better ergonomics.

Microphones and speakers were installed in the control room and the truck and routed via cell phones. The remote driver and safety driver could now speak directly with each other, with the connection left open throughout each session.

Poor video quality and visibility were ongoing challenges. Some of my solutions included:

- Applying a matrix algorithm to warp the panoramic video, so it filled the displays more naturally and proportionally

- Using two separate cell service providers to provide redundancy and reduce the risk of dropouts

- Repositioning the panoramic camera in the truck so it looked ahead, rather than down at the ground, so it no longer felt like the truck was driving too fast (a phenomenon known as angular velocity perception)
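The warping step in the list above can be illustrated with a small sketch. It assumes the "matrix algorithm" is a 3x3 projective (homography) transform applied to pixel coordinates; the case study does not say which matrix was used, so the `STRETCH` matrix and the `warp_point` helper below are hypothetical.

```python
def warp_point(matrix, x, y):
    """Map one pixel coordinate through a 3x3 projective (homography) matrix."""
    (a, b, c), (d, e, f), (g, h, i) = matrix
    w = g * x + h * y + i  # homogeneous scale factor
    if w == 0:
        raise ValueError("point maps to infinity under this matrix")
    return ((a * x + b * y + c) / w, (d * x + e * y + f) / w)


# Hypothetical matrix (an assumption, not the production values):
# widen the image by 25% horizontally and leave the vertical axis untouched.
STRETCH = (
    (1.25, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
)

# Corners of a 1920x1080 frame before and after the warp.
corners = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]
warped = [warp_point(STRETCH, x, y) for x, y in corners]
```

A real pipeline would apply the same mapping to every pixel (usually on the GPU or through an image library) rather than to four corners, but the per-point arithmetic is the same.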

UX Research & Design

Safety & confidence

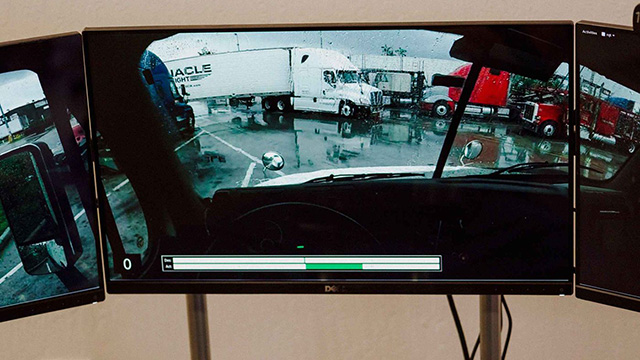

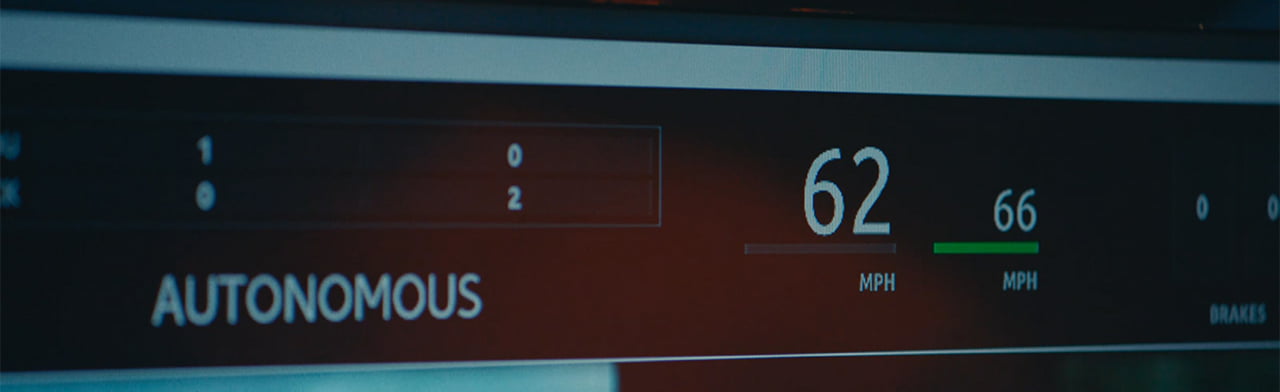

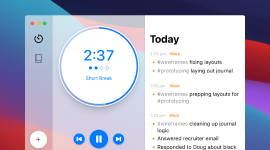

Teleoperation control station HUD

Safety driver HUD

Drivers needed complete situational awareness at all times

The control station was 2,600 miles from the truck, which often operated in remote areas with poor cell service. Video artifacts and frozen frames made teleoperation unreliable and difficult, while random dropouts and signal loss introduced serious safety risks.

To mitigate these challenges, I immediately implemented system-status indicators and error alerts, prominently displaying them in both the control station and the safety driver’s HUD in the cabin. When a connection issue occurred, the remote driver would shout "No signal!"—a cue for the safety driver to immediately disengage the system and take control.
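One way a status indicator like this could be driven is by the age of the most recent video frame. The sketch below is an assumption about the approach, not the system Starsky shipped; the thresholds and the names `LinkStatus` and `link_status` are hypothetical.

```python
from enum import Enum


class LinkStatus(Enum):
    OK = "ok"
    DEGRADED = "degraded"
    NO_SIGNAL = "no signal"


# Hypothetical thresholds, in seconds since the last video frame arrived.
DEGRADED_AFTER_S = 0.25
NO_SIGNAL_AFTER_S = 1.0


def link_status(now_s, last_frame_s):
    """Classify the video link by how stale the newest frame is."""
    age = now_s - last_frame_s
    if age >= NO_SIGNAL_AFTER_S:
        return LinkStatus.NO_SIGNAL
    if age >= DEGRADED_AFTER_S:
        return LinkStatus.DEGRADED
    return LinkStatus.OK
```

Both HUDs could poll a function like this on every display refresh, so the remote driver and the safety driver see the same status at the same moment.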

We also established closed-loop communication protocols for handoffs. During every transition, drivers would explicitly confirm by stating, "Taking control," "You have control," and finally, "I have control." This procedure ensured absolute clarity over who was operating the truck at any given time—a protocol similar to those used in helicopter pilot training.
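The three-call handoff can be modeled as a tiny state machine. This is an illustrative sketch only: the source gives the phrases but not who speaks each one, so the speaker order here (incoming driver, current driver, incoming driver) is an assumption, and the `Handoff` class is hypothetical.

```python
class Handoff:
    """Three-step closed-loop handoff between two drivers."""

    CALLS = ("Taking control", "You have control", "I have control")

    def __init__(self, current, incoming):
        self.current = current    # driver operating the truck now
        self.incoming = incoming  # driver about to take over
        self.step = 0

    @property
    def complete(self):
        return self.step == len(self.CALLS)

    @property
    def operator(self):
        # Control only changes hands once all three calls have been made.
        return self.incoming if self.complete else self.current

    def say(self, speaker, phrase):
        if self.complete:
            raise ValueError("handoff already complete")
        # Assumed order: the incoming driver speaks first and last,
        # the current driver acknowledges in between.
        expected_speaker = self.current if self.step == 1 else self.incoming
        expected_phrase = self.CALLS[self.step]
        if (speaker, phrase) != (expected_speaker, expected_phrase):
            raise ValueError(
                f"expected {expected_speaker!r} to say {expected_phrase!r}"
            )
        self.step += 1


handoff = Handoff(current="remote driver", incoming="safety driver")
handoff.say("safety driver", "Taking control")
handoff.say("remote driver", "You have control")
operator_before_final_call = handoff.operator
handoff.say("safety driver", "I have control")
operator_after_final_call = handoff.operator
```

Any call that is out of order or from the wrong speaker raises an error, which mirrors the point of the spoken protocol: control is never assumed, only confirmed.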

UX Research

Driver training

Training started with a video game

I installed American Truck Simulator in the control station. New drivers could become proficient with the control station interface by playing a video game before attempting to operate a truck in the real world.

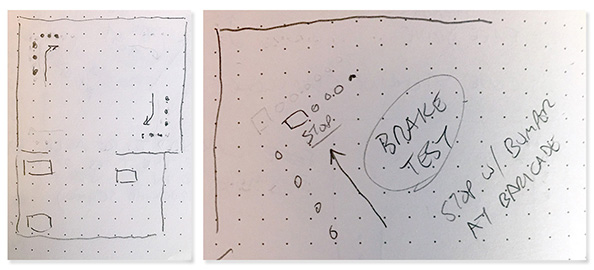

Hands-on training was incremental. We started with safety drills, handoffs, and slow circles around a large, private, open lot. Drivers practiced making turns and applying the brakes smoothly, without tossing everyone around in the truck.

In time, they were able to move from the closed lot to low-traffic areas like back roads and rest stops. After that came operating a truck with a trailer, driving on the highway, on-ramps and off-ramps, and gradually into increasing densities of street traffic.

Remote driver waits to make a left turn during driving practice

System Design

Designing for scale

The initial prototype was an all-in-one system, with driving controls and admin controls in the same interface and the control station linked directly to the truck. To control a different truck, a developer had to update the code and deploy a new build.

I separated the admin controls from the driver station. The driver station now contained only the essentials for driving the truck: video, indicators, and controls. The admin station handled the connection to the truck, and evolved into a control center for connecting and managing multiple trucks, as well as tracking their location, speed, fuel levels, and cell signal strength.

The teleoperation room was in San Francisco, but the trucks were driving around in south Florida. Despite the roughly 3,000-mile distance, the system worked in real time, though not always perfectly. To reduce signal dropouts and other issues, we opened a second teleoperation center in Florida, close to the trucks.

Two operation centers suddenly presented the potential for conflicting connections. I quickly developed new features and safety checks to track every control station, truck and active connection, as well as prevent the stations from accidentally connecting to active trucks.
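A minimal sketch of such a safety check is shown below. It is not the actual Starsky implementation: the `ConnectionRegistry` class, the station and truck IDs, and the rule that a station drives one truck at a time are all assumptions made for illustration.

```python
class ConnectionRegistry:
    """Tracks which control station holds which truck and blocks conflicts."""

    def __init__(self):
        self._station_by_truck = {}

    def connect(self, station_id, truck_id):
        holder = self._station_by_truck.get(truck_id)
        if holder is not None and holder != station_id:
            raise RuntimeError(
                f"truck {truck_id} is already controlled by {holder}"
            )
        # Assumption: one station may drive only one truck at a time.
        if holder is None and station_id in self._station_by_truck.values():
            raise RuntimeError(
                f"station {station_id} already has an active connection"
            )
        self._station_by_truck[truck_id] = station_id

    def disconnect(self, truck_id):
        self._station_by_truck.pop(truck_id, None)

    def active(self):
        """Return a copy of the truck -> station map for the admin view."""
        return dict(self._station_by_truck)


registry = ConnectionRegistry()
registry.connect("sf-station-1", "truck-A")
try:
    registry.connect("fl-station-1", "truck-A")  # truck-A is already active
    blocked = False
except RuntimeError:
    blocked = True
registry.connect("fl-station-1", "truck-B")
```

In a two-center deployment the registry would have to live in one shared service so that both sites see the same state; the in-memory dictionary here only shows the conflict rule itself.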

Delivery

A successful demo

On September 22nd, 2017, Starsky Robotics completed the world's first end-to-end autonomous trucking run
